What are the various methods of testing and evaluating/validating a model?

Evaluating the performance of a model is one of the most important stages in predictive modelling, because it indicates how successful the model has been on the dataset. It lets you tune parameters and, in the end, test the tuned model against a fresh cut of data. In this article, we will look at some of the most commonly used methods for testing and validating a machine learning model.

Below we will look at a few of the most common validation metrics used in predictive modelling. The choice of metric influences how you weigh the importance of different characteristics in the results, and ultimately which machine learning algorithm you choose. Before we move on to the metrics, let’s get the basics right.

The most important thing to keep in mind is that the training data and the test data must be different. In other words, always hold out a cut of data that is not used for training, and test your model against it once fitting and tuning are finished.

There is a very simple way to split train and test data using Scikit-Learn:

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split

data = datasets.load_iris()
X = data['data']
y = data['target']

# Hold out 30% of the samples as a test set
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

print('Full dataset, features:', len(X))
print('Full dataset, labels:', len(y))
print('Train dataset, features:', len(X_train))
print('Test dataset, features:', len(X_test))
print('Train dataset, labels:', len(y_train))
print('Test dataset, labels:', len(y_test))
```

In some cases, this may feel like ‘losing’ part of the training set, especially when the data sample is not large enough. There are ways around this; one of the most popular is ‘Cross Validation’.

Cross Validation

It is a simple ‘trick’ that splits the data into n equal parts (folds). Each part is then held out in turn while the model is fitted on the rest. This gives n estimates of model performance that can be combined into an overall measure. Although ‘heavy’ from a computing point of view, it is a very efficient and widely used method for detecting overfitting and for obtaining a more reliable estimate of the model’s ‘out of sample’ performance.

Here is an example of Cross Validation using Scikit-Learn:

```python
from sklearn import datasets
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score, train_test_split

data = datasets.load_iris()
X = data['data']
y = data['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# 10 folds; shuffle=True is required for random_state to have any effect
kfold = KFold(n_splits=10, shuffle=True, random_state=1)
model = LogisticRegression(max_iter=1000)

# One accuracy score per held-out fold
scores = cross_val_score(model, X_train, y_train, cv=kfold)
print(scores.mean(), scores.std())
```
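To make the mechanics concrete, the same 10-fold procedure can be written out as an explicit loop: fit on nine folds, score on the held-out one, repeat. This is a sketch that assumes a recent Scikit-Learn (with `sklearn.model_selection`). With the same `KFold` settings it should reproduce the numbers from `cross_val_score`, because `model.score` returns accuracy for classifiers, which is also the default scorer.

```python
import numpy as np
from sklearn import datasets
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, train_test_split

X, y = datasets.load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

kfold = KFold(n_splits=10, shuffle=True, random_state=1)
fold_scores = []
for train_idx, val_idx in kfold.split(X_train):
    # Fit a fresh model on the other nine folds...
    model = LogisticRegression(max_iter=1000)
    model.fit(X_train[train_idx], y_train[train_idx])
    # ...and score it on the held-out fold (accuracy for classifiers)
    fold_scores.append(model.score(X_train[val_idx], y_train[val_idx]))

fold_scores = np.array(fold_scores)
print(fold_scores.mean(), fold_scores.std())
```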

Two main types of predictive models.

In predictive modelling, there are two main types of problems to be solved:

- Regression problems are those where you try to predict or explain one thing (the dependent variable) using other things (the independent variables), and the output is continuous, e.g. the exact price of a stock the next day.

- Classification problems try to determine group membership, often by deriving probabilities, e.g. will the stock price go up, go down or stay unchanged the next day. Algorithms like SVM and KNN create a class output. Algorithms like Logistic Regression, Random Forest, Gradient Boosting, AdaBoost etc. give probability outputs. Converting probability outputs to a class output is just a matter of choosing a threshold probability.
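A minimal sketch of that thresholding step in NumPy. The helper name `probs_to_classes` and the probabilities are made up for illustration. In Scikit-Learn, positive-class probabilities of this kind would typically come from `model.predict_proba(X)[:, 1]` for a binary classifier.

```python
import numpy as np

def probs_to_classes(probs, threshold=0.5):
    """Hypothetical helper: turn positive-class probabilities into 0/1 labels."""
    # A case is labelled 1 when its probability reaches the cut-off
    return (np.asarray(probs) >= threshold).astype(int)

probs = np.array([0.1, 0.45, 0.5, 0.8])
print(probs_to_classes(probs))       # default 0.5 cut-off -> [0 0 1 1]
print(probs_to_classes(probs, 0.7))  # stricter cut-off   -> [0 0 0 1]
```

Raising the threshold makes the classifier more conservative about predicting the positive class, which is why the same model can yield different class outputs.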

Regression problems do not need this conversion step: the output is always continuous in nature and requires no further treatment.

Techniques/metrics for model validation.

Once the dataset is split and the model fitted, the question is which quantifiable validation metrics to use. There are a few very basic, quick and dirty ways to check performance. One of them is the value range: if the model outputs fall far outside the range of the response variable, that immediately indicates poor estimation or an inaccurate model.
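The value-range check can be sketched in a few lines. `out_of_range_share` is a hypothetical helper, and it assumes that the range observed in the training response is the reference range.

```python
import numpy as np

def out_of_range_share(y_train, y_pred):
    """Hypothetical helper: share of predictions outside the observed response range."""
    lo, hi = np.min(y_train), np.max(y_train)
    y_pred = np.asarray(y_pred)
    # Flag predictions below the minimum or above the maximum seen in training
    return np.mean((y_pred < lo) | (y_pred > hi))

y_train = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 12.0, -4.0]
print(out_of_range_share(y_train, y_pred))  # 0.5
```

A large share is a red flag. A share of zero, on the other hand, proves nothing about accuracy.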

Most often there is a need to use something more sophisticated and ‘scientific’.

Accuracy

Accuracy is a classification metric: the number of correct predictions as a ratio of all predictions made. It is probably the most common evaluation metric for classification problems.

Below is an example of calculating classification accuracy using Scikit-Learn.

```python
from sklearn.linear_model import LogisticRegression
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

data = datasets.load_iris()
X = data['data']
y = data['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
preds = model.predict(X_test)

# Metric functions take the true labels first, then the predictions
print(accuracy_score(y_test, preds))
```

Precision

Precision is the ratio of correctly predicted positive observations to all observations predicted as positive. In a survival-prediction task, for example, the question this metric answers is: of all passengers labeled as survived, how many actually survived? High precision means few false positives among the predicted positives.
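On a binary problem, precision is simply TP / (TP + FP). The sketch below computes this by hand and compares it with Scikit-Learn. The labels are invented for illustration, with 1 standing for the positive class (for instance "survived"), which is also `precision_score`'s default positive label.

```python
from sklearn.metrics import precision_score

# Hypothetical binary labels: 1 = positive, 0 = negative
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]

pairs = list(zip(y_true, y_pred))
tp = pairs.count((1, 1))  # predicted positive and actually positive
fp = pairs.count((0, 1))  # predicted positive but actually negative

precision = tp / (tp + fp)  # 3 / (3 + 2)
print(precision, precision_score(y_true, y_pred))  # both 0.6
```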

```python
from sklearn.linear_model import LogisticRegression
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import precision_score

data = datasets.load_iris()
X = data['data']
y = data['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
preds = model.predict(X_test)

# True labels first; average=None returns one precision value per class
print(precision_score(y_test, preds, average=None))
```

Sensitivity or Recall

Recall (Sensitivity) is the ratio of correctly predicted positive observations to all observations that actually belong to the positive class.
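On a binary problem, recall is TP / (TP + FN), i.e. the share of actual positives that the model finds. Here is a hand computation on invented labels (1 = positive class, `recall_score`'s default), checked against Scikit-Learn:

```python
from sklearn.metrics import recall_score

# Hypothetical binary labels: 1 = positive, 0 = negative
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]

pairs = list(zip(y_true, y_pred))
tp = pairs.count((1, 1))  # actual positives the model caught
fn = pairs.count((1, 0))  # actual positives the model missed

recall = tp / (tp + fn)  # 3 / (3 + 1)
print(recall, recall_score(y_true, y_pred))  # both 0.75
```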

```python
from sklearn.linear_model import LogisticRegression
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import recall_score

data = datasets.load_iris()
X = data['data']
y = data['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
preds = model.predict(X_test)

# True labels first; average=None returns one recall value per class
print(recall_score(y_test, preds, average=None))
```

F1 score

The F1 score is the harmonic mean of precision and recall, so it takes both false positives and false negatives into account. It is less intuitive than accuracy, but F1 is usually more useful than accuracy, especially if you have an uneven class distribution.
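The harmonic mean is F1 = 2 · P · R / (P + R). The sketch below works through it on invented binary labels (1 = positive class). Precision is 0.6 and recall is 0.75, so F1 comes out at 2/3, which is closer to the weaker of the two than a plain average would be.

```python
from sklearn.metrics import f1_score

# Hypothetical binary labels: 1 = positive, 0 = negative
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]

pairs = list(zip(y_true, y_pred))
tp, fp, fn = pairs.count((1, 1)), pairs.count((0, 1)), pairs.count((1, 0))

precision = tp / (tp + fp)  # 0.6
recall = tp / (tp + fn)     # 0.75
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
print(f1, f1_score(y_true, y_pred))
```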

```python
from sklearn.linear_model import LogisticRegression
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import f1_score

data = datasets.load_iris()
X = data['data']
y = data['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
preds = model.predict(X_test)

# True labels first; average=None returns one F1 value per class
print(f1_score(y_test, preds, average=None))
```

Confusion Matrix

It is an N x N matrix, where N is the number of classes being predicted. The confusion matrix presents the accuracy of a model with two or more classes. The table shows the predictions on the x-axis (columns) and the actual outcomes on the y-axis (rows).

For a binary problem there are four possible outcomes:

- True positives (TP), which are the instances that are positives and are classified as positives.

- False positives (FP), which are the instances that are negatives and are classified as positives.

- False negatives (FN), which are the instances that are positives and are classified as negatives.

- True negatives (TN), which are the instances that are negatives and are classified as negatives.
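The four outcomes can be counted directly from pairs of (actual, predicted) labels. The sketch below uses invented binary labels (1 = positive) and cross-checks the counts against Scikit-Learn. For binary input, Scikit-Learn lays its matrix out as [[TN, FP], [FN, TP]], with actual classes as rows.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical binary labels: 1 = positive, 0 = negative
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]

pairs = list(zip(y_true, y_pred))
tp = pairs.count((1, 1))  # positive, classified as positive
fp = pairs.count((0, 1))  # negative, classified as positive
fn = pairs.count((1, 0))  # positive, classified as negative
tn = pairs.count((0, 0))  # negative, classified as negative
print(tp, fp, fn, tn)  # 3 2 1 2

# ravel() flattens [[TN, FP], [FN, TP]] into tn, fp, fn, tp
print(confusion_matrix(y_true, y_pred).ravel())
```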

Below is example code to compute the confusion matrix using Scikit-Learn.

```python
from sklearn.linear_model import LogisticRegression
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import confusion_matrix

data = datasets.load_iris()
X = data['data']
y = data['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
preds = model.predict(X_test)

# Rows are actual classes, columns are predicted classes
print(confusion_matrix(y_test, preds))
```

Gain and Lift Chart.

Often, a single measure of the model’s overall effectiveness is not enough. It may be important to know how much the model helps as we act on a larger share of the population, ranked by model score. For example, is there any marginal improvement in the model’s predictive ability if we consider the top 70% of the data versus only the top 50%?

A lift chart shows the actual lift for each percentage of the population. Lift is defined as the ratio between the percentage of positive instances found by using the model and the percentage found without using it.

These charts are common in business analytics for direct marketing, where the problem is to identify whether a particular prospect is worth calling.

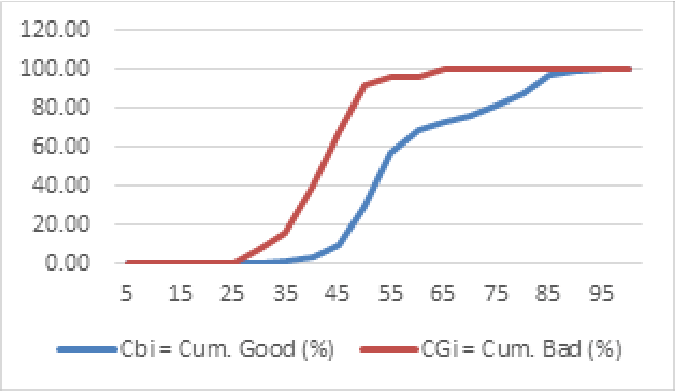
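A cumulative gain and lift table can be sketched as follows. Cases are ranked by model score. For each bin, the table records the share of the population targeted, the share of all positives captured (gain), and gain divided by population share (lift, where 1.0 means "no better than random"). The helper `gain_lift_table`, the 1 = positive coding and the ten prospect scores are all assumptions made for illustration.

```python
import numpy as np

def gain_lift_table(y_true, scores, n_bins=10):
    """Hypothetical helper: (share of population, cumulative gain, lift) per bin.

    Assumes binary labels (1 = positive) and at least one positive case.
    """
    y_true = np.asarray(y_true)
    # Rank cases from highest to lowest model score
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    y_sorted = y_true[order]
    n, total_pos = len(y_sorted), y_sorted.sum()
    rows = []
    for k in range(1, n_bins + 1):
        cutoff = max(1, n * k // n_bins)            # cases targeted so far
        share = cutoff / n                          # share of population targeted
        gain = y_sorted[:cutoff].sum() / total_pos  # share of all positives captured
        rows.append((share, gain, gain / share))    # lift relative to random targeting
    return rows

# Hypothetical scores for 10 prospects; 1 = responded
y = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
s = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
for share, gain, lift in gain_lift_table(y, s, n_bins=5):
    print(f"{share:.0%} targeted: gain {gain:.2f}, lift {lift:.2f}")
```

In this toy data, calling the top 60% of prospects already captures every responder. Lift then falls towards 1.0 as the rest of the population is added.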

Kolmogorov-Smirnov Chart.

This non-parametric statistical test is used to compare two distributions and assess how close they are to each other. In its classic one-sample form, one of the distributions is the theoretical distribution that the observations are supposed to follow (usually a continuous distribution with one or two parameters, such as Gaussian), while the other is the actual, empirical, parameter-free, discrete distribution computed on the observations.

In model validation, KS is the maximum difference between the cumulative % of Goods and the cumulative % of Bads across score/probability bands. The gains table typically has % cumulative Goods (or Events) and % cumulative Bads (or Non-events) across 10 or 20 score bands. Using the gains table, we can find the KS for the model that was used to create it.

KS is a point estimate: it is a single value, and it indicates the score/probability band where the separation between Goods (or Events) and Bads (or Non-events) is greatest.

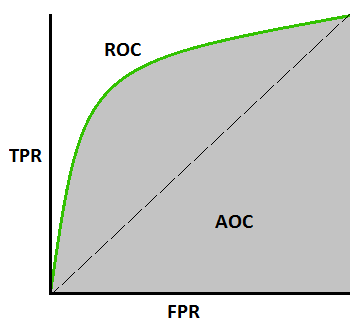
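The sketch below computes the KS statistic as the maximum gap between the cumulative score distributions of events and non-events. It assumes events are coded 1 and non-events 0. Instead of fixed 10 or 20 bands, it evaluates both cumulative distributions at every distinct score, which is the finest-grained version of the gains-table calculation. `ks_statistic` is a hypothetical helper. For a cross-check, `scipy.stats.ks_2samp` on the two groups' scores computes the same two-sample statistic.

```python
import numpy as np

def ks_statistic(y_true, scores):
    """Hypothetical helper: max gap between cumulative score distributions of events and non-events."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    thresholds = np.unique(scores)      # every distinct score acts as a band edge
    pos = np.sort(scores[y_true == 1])  # events (Goods)
    neg = np.sort(scores[y_true == 0])  # non-events (Bads)
    # Cumulative share of each group with a score at or below each threshold
    cdf_pos = np.searchsorted(pos, thresholds, side="right") / len(pos)
    cdf_neg = np.searchsorted(neg, thresholds, side="right") / len(neg)
    return np.max(np.abs(cdf_pos - cdf_neg))

print(ks_statistic([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.5
print(ks_statistic([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9]))   # 1.0, perfect separation
```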

Area Under the ROC curve (AUC – ROC)

The Receiver Operating Characteristic (ROC), or ROC curve, is a graphical plot that illustrates the performance of a binary classifier. The curve is created by plotting the true positive rate (TPR) against the false positive rate (FPR) at various threshold settings. It is one of the most popular metrics used in industry. The biggest advantage of the ROC curve is that it is almost independent of changes in the proportion of responders (the response rate).

An area under ROC Curve (or AUC) is a performance metric for binary classification problems. The AUC represents a model’s ability to discriminate between positive and negative classes. An area of 1.0 represents a model that made all predictions perfectly. An area of 0.5 represents a model as good as random.

The example below provides a demonstration of calculating AUC.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])

print(roc_auc_score(y_true, y_scores))  # 0.75
```
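One way to read AUC as "ability to discriminate" is through pairs: AUC equals the probability that a randomly chosen positive instance gets a higher score than a randomly chosen negative one, with ties counting as half. The sketch below checks this on the same four scores. `pairwise_auc` is a hypothetical helper, and it compares all pairs, which is fine for small data but quadratic in size.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

def pairwise_auc(y_true, scores):
    """Hypothetical helper: share of (positive, negative) pairs ranked correctly."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    # Compare every positive score with every negative score
    diff = pos[:, None] - neg[None, :]
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / diff.size

y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])
print(pairwise_auc(y_true, y_scores), roc_auc_score(y_true, y_scores))  # 0.75 0.75
```

Three of the four (positive, negative) pairs are ordered correctly. The only miss is the positive scored 0.35 against the negative scored 0.4.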

Gini Coefficient

The Gini coefficient is used in classification problems. It can be derived from the AUC ROC number: Gini = 2 * AUC - 1.

```python
from sklearn.linear_model import LogisticRegression
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import roc_auc_score

data = datasets.load_iris()
X = data['data']
y = data['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Gini is a ranking metric, so it needs scores (probabilities), not hard labels
probs = model.predict_proba(X_test)

# Iris has three classes: average the one-vs-rest AUCs
auc = roc_auc_score(y_test, probs, multi_class='ovr')

# Gini derived from AUC
gini = 2 * auc - 1
print(gini)
```

Logarithmic Loss

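Log Loss quantifies the accuracy of a classifier by penalizing false classifications, with confident wrong predictions penalized most heavily. Minimizing Log Loss generally goes together with higher accuracy, but the two are not equivalent, because Log Loss also takes into account how well calibrated the predicted probabilities are.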

In order to calculate Log Loss, the classifier must assign a probability to each class rather than simply give the most likely class. A perfect classifier would have a Log Loss of precisely zero. Less ideal classifiers have progressively larger values of Log Loss.
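For binary labels, Log Loss is the average of −[y·log(p) + (1 − y)·log(1 − p)], where p is the predicted probability of the positive class. The sketch computes this by hand and compares it with Scikit-Learn's `log_loss`. The labels and probabilities are invented for illustration, and the clipping constant is an assumption used only to keep log(0) out of the calculation.

```python
import numpy as np
from sklearn.metrics import log_loss

# Hypothetical binary labels and predicted probabilities of class 1
y_true = np.array([0, 0, 1, 1])
y_prob = np.array([0.1, 0.4, 0.35, 0.8])

# Clip so that probabilities of exactly 0 or 1 cannot produce log(0)
p = np.clip(y_prob, 1e-15, 1 - 1e-15)
manual = -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))

print(manual, log_loss(y_true, y_prob))  # both about 0.472
```

The third case (a positive given only 0.35) contributes most of the loss. Pushing that probability towards 1 would drive the total towards zero.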

Mean Absolute Error

The mean absolute error (MAE) is a quantity used to measure how close forecasts or predictions are to the eventual outcomes.

The Mean Absolute Error (or MAE) is the average of the absolute differences between predictions and actual values. The measure gives an idea of the magnitude of the error, but no idea of its direction.

A simple example is shown below:

```python
from sklearn.metrics import mean_absolute_error

y_true = [3, -0.5, 2, 7]
y_pred = [2.5, 0.0, 2, 8]

print(mean_absolute_error(y_true, y_pred))
```

Mean Squared Error

MSE is one of the most common measures used to quantify the performance of a model. It is the average of the squared differences between the actual observations and the predicted values. Squaring the errors gives heavy weight to statistical outliers, which can distort the result.

Below is a basic example of the calculation using Scikit-Learn.

```python
from sklearn.metrics import mean_squared_error

y_true = [3, -0.5, 2, 7]
y_pred = [2.5, 0.0, 2, 8]

print(mean_squared_error(y_true, y_pred))
```

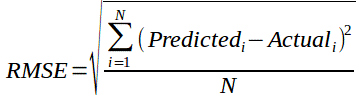

Root Mean Squared Error (RMSE)

RMSE is one of the most popular evaluation metrics used in regression problems. It assumes that the errors are unbiased and follow a normal distribution.

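The RMSE metric is given by:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_i - y_i\right)^2}$$

where N is the total number of observations, $y_i$ is the actual value and $\hat{y}_i$ is the predicted value of observation i.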
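RMSE is the square root of the mean squared error. The sketch computes it by hand on the same four values used in the MSE example and checks it against the square root of Scikit-Learn's `mean_squared_error`. Taking the square root explicitly avoids relying on any version-specific RMSE option.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

y_true = np.array([3, -0.5, 2, 7])
y_pred = np.array([2.5, 0.0, 2, 8])

n = len(y_true)  # N, the total number of observations
rmse_manual = np.sqrt(np.sum((y_pred - y_true) ** 2) / n)
rmse_sklearn = np.sqrt(mean_squared_error(y_true, y_pred))
print(rmse_manual, rmse_sklearn)  # both sqrt(0.375), about 0.612
```

Because of the square root, RMSE is expressed in the same units as the target, which makes it easier to interpret than MSE.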

R Squared Metric

The R^2 (or R Squared) metric provides an indication of the goodness of fit of a set of predictions to the actual values. In statistical literature, this measure is called the coefficient of determination. It typically ranges from 0 (no fit) to 1 (perfect fit), although it can become negative when the predictions are worse than simply predicting the mean of the actual values.

Below is a basic example of the calculation using Scikit-Learn.

```python
from sklearn.metrics import r2_score

y_true = [3, -0.5, 2, 7]
y_pred = [2.5, 0.0, 2, 8]

print(r2_score(y_true, y_pred))
```
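R^2 is 1 minus the ratio of the residual sum of squares to the total sum of squares around the mean of the actual values. The sketch computes it by hand for the same four values. It also shows that a model doing worse than predicting the mean gets a negative score. The constant prediction of 10 is an arbitrary choice for illustration.

```python
import numpy as np
from sklearn.metrics import r2_score

y_true = np.array([3, -0.5, 2, 7])
y_pred = np.array([2.5, 0.0, 2, 8])

ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares around the mean
manual_r2 = 1 - ss_res / ss_tot
print(manual_r2, r2_score(y_true, y_pred))

# A constant prediction far from the data does worse than the mean: negative R^2
print(r2_score(y_true, np.full_like(y_true, 10.0)))
```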
